My name is Saptadeep Debnath, and I'm a Robotics Engineer with a passion for innovation and problem-solving. I hold a Master's degree in Electrical and Computer Engineering, specializing in Robotics, from the University of Michigan, and I have extensive experience designing and developing advanced robotic systems.

As a Robotics Engineer at Equipment Technologies, Inc., I lead the Vision-Based Advanced Driver Assistance System initiative as its Product Owner, driving product innovation and improving operational efficiency. I designed a CNN-based semantic segmentation network that precisely predicts crop rows to guide CAN-linked machine steering. I also built a ROS pipeline from scratch that seamlessly relays messages from the prediction software to the steering control manager, streamlining operations and minimizing downtime.

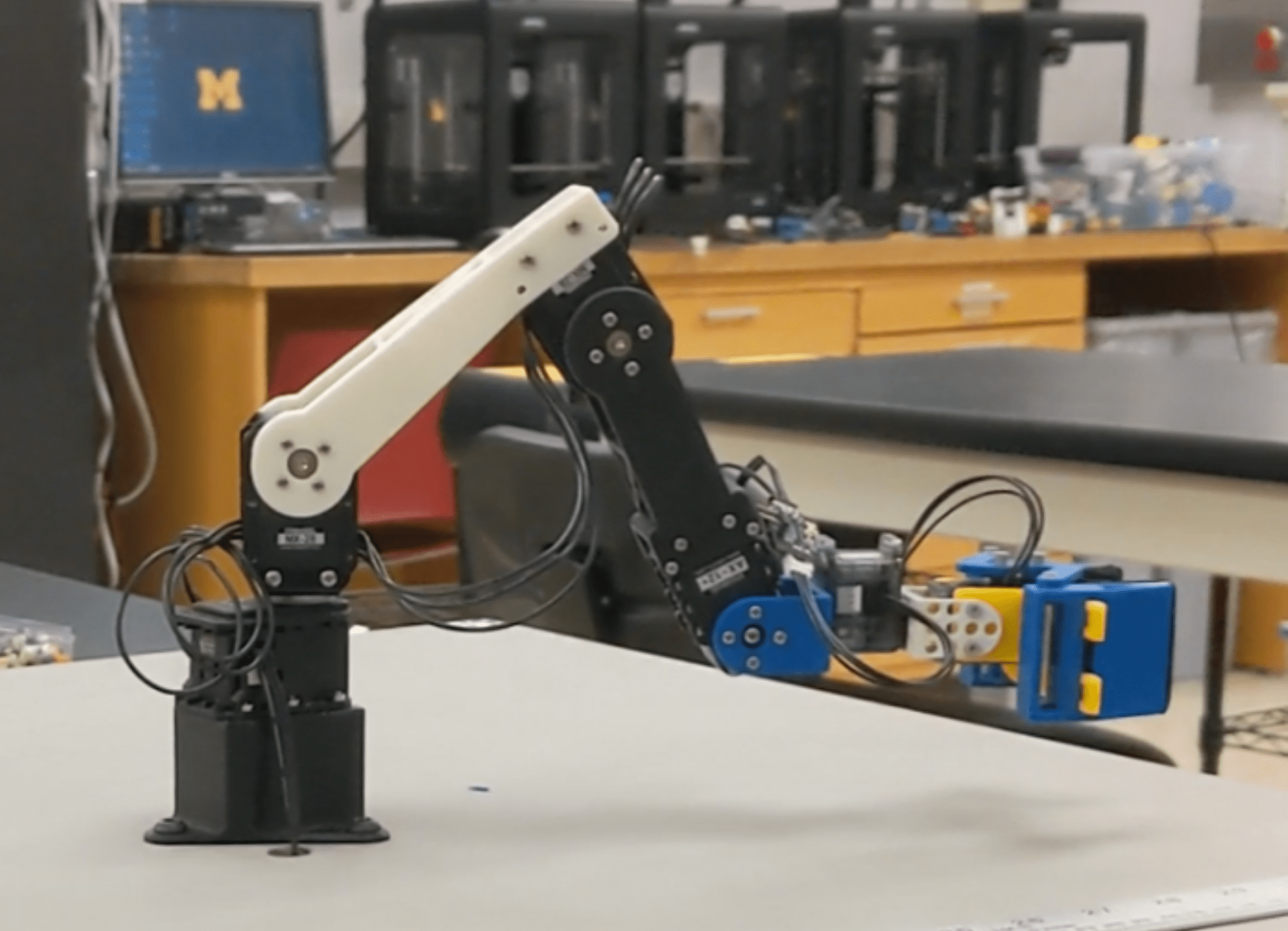

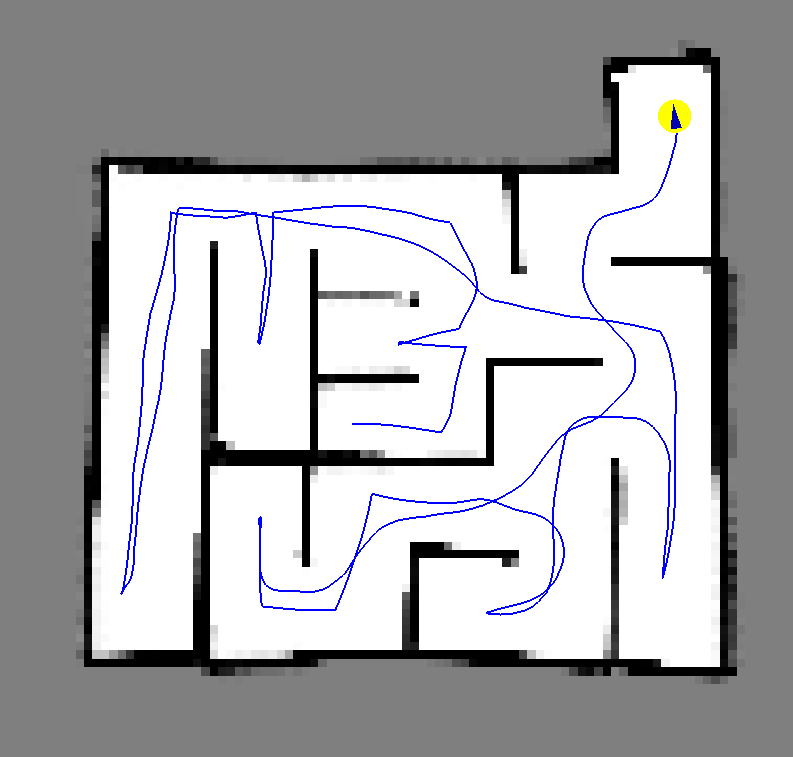

For my undergraduate thesis at Fulda University of Applied Sciences, I studied how LSTM network performance changes as the training dataset is varied. I also built a ROS pipeline that controls a robot through real-time freehand gestures with 98% accuracy.

My concentration areas include Robotic System Design, Machine Vision, Deep Learning, and Control Systems. I'm proficient in languages such as Python, C, C++, Bash, and HTML. I also have experience with tools and technologies such as the Robot Operating System (ROS), OpenCV, PyTorch, NVIDIA Jetson AGX Xavier, and machine vision cameras (Intel RealSense, ZED 2i).

Please take a moment to explore the different tabs on my portfolio website and check out some of my highlighted projects. If you have any questions or would like to get in touch, please don't hesitate to email me at saptadeb@umich.edu.